The Damage Caused by Downtime

Data center reliability has helped to increase the operational effectiveness of thousands of companies around the globe. Improvements in technology and the design of server farms, as well as in

Selecting and purchasing a hard drive used to be a simple task. Generally people would find a few of the highest capacity drives they could afford, pick the fastest of

Recently, Intel and Facebook banded together to create the next generation of data center rack technology. The prototype uses an innovative approach called the photonic rack architecture. The design

In today’s world, protecting data from hackers and viruses has never been more important. There are many different types of data protection available on the market today, all of which

If you are designing a data center, one of the most critical issues to focus on is security. There is an abundance of threats that the data center will face,

There’s nothing quite like the satisfaction that comes with doing things on your own, but you might be better off working with someone else for certain tasks, such as data

Non-renewable Resources

Non-renewable resources such as petroleum, uranium, coal, and natural gas provide the energy that powers our cars, houses, offices, and factories. Without energy we

A group of leading high-tech companies, led by IBM, has formed a new alliance to further the development of advanced server technologies that will power a new generation of faster,

In modern society, people are very dependent on technology. With all of the advancements in modern electronics and technology, data centers are essential. Many servers are required to store data

Businesses often use data centers to back up and protect their vital information and data, as well as the information and data of their customers and clients. Since using a data

Data centers have been around for decades. In their early days, they were huge computer rooms that were very complex to maintain and operate. In fact, they sometimes

The amount of data available in the world today is staggering, estimated by a 2011 Digital Universe Study from IDC and EMC to be around 1.8 zettabytes (1.8 trillion gigabytes),
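The parenthetical conversion above can be checked with a few lines of arithmetic. This minimal sketch assumes decimal (SI) prefixes, where 1 GB is 10^9 bytes and 1 ZB is 10^21 bytes; binary units such as ZiB and GiB would give a slightly different figure. The function name is hypothetical and only for illustration.

```python
from decimal import Decimal

# Assumption: decimal (SI) prefixes, not binary ones (GiB, ZiB).
BYTES_PER_GIGABYTE = Decimal(10) ** 9
BYTES_PER_ZETTABYTE = Decimal(10) ** 21


def zettabytes_to_gigabytes(zettabytes: Decimal) -> Decimal:
    """Convert zettabytes to gigabytes by going through bytes."""
    return zettabytes * BYTES_PER_ZETTABYTE / BYTES_PER_GIGABYTE


# 1.8 ZB, the figure cited from the 2011 IDC/EMC Digital Universe study.
gigabytes = zettabytes_to_gigabytes(Decimal("1.8"))
print(f"{gigabytes:,.0f} GB")
```

Using `Decimal` rather than floats keeps the result exact, so 1.8 ZB comes out as exactly 1.8 trillion GB.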

The Importance of Secure Data Protection For Individuals and Businesses

Protecting data is becoming increasingly important in our highly internet-savvy world. With millions of people using the

Cloud computing has become increasingly popular over the past several years. It enables hosted services to be delivered over the internet, rather than requiring information to be stored on local computers. The data

The 2013 data center census works to give a glimpse into the future of the data center industry. Although the census is not able to completely predict the future, it

In terms of disasters, few people are prepared for the chaos that can come as a result of any type of disaster. Floods, fires, tornadoes, hurricanes, and even heavy rainstorms

The city of Luleå, Sweden, is situated in the frigid northern part of the country. Just sixty miles from the Arctic Circle, temperatures in Luleå can sink as low

Modular data centers became popular when the economy started to circle the drain. Businesses needed new ways to secure funding in small amounts while simultaneously decreasing the risks that came

Possible Future Ramifications For Data Center Regulations And Construction Requiring PUE Surveys

There are three main categories in which PUE surveys would likely be used in the future construction and
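For readers unfamiliar with the metric these surveys would collect: PUE (power usage effectiveness), as defined by The Green Grid, is total facility energy divided by the energy delivered to IT equipment over the same period. A value of 1.0 would mean every kilowatt-hour reaches the IT load; anything higher is overhead such as cooling, lighting, and power conversion losses. The sketch below is illustrative only; the function name and the kWh figures are hypothetical, not survey data.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / IT equipment energy.

    Both inputs must cover the same measurement period.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT equipment energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical annual figures for a single facility (illustrative only).
total_kwh = 1_800_000.0  # everything the facility draws: IT, cooling, lighting, losses
it_kwh = 1_200_000.0     # energy delivered to servers, storage, and network gear
print(power_usage_effectiveness(total_kwh, it_kwh))  # → 1.5
```

Rejecting a total below the IT load is a deliberate sanity check: PUE cannot fall below 1.0, so such an input almost certainly indicates a measurement error.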

Google currently has 13 data centers located in North America, South America, Asia and Europe. These data centers house the thousands of machines needed to run Google’s operations. Whether a

Changes in data center technology, and in the energy needed to run that equipment, have created a need for new and innovative cooling methods. Cooling methods

How An Uninterruptible Power Supply Is Maintained

Maintenance is a must for all computers and all computer network components. Without maintenance,

Important Changes Taking Place In The Uninterruptible Power Supply Industry

It’s taken for granted that the technology field is changing every day. Because data

Many businesses benefit from using a data center. A data center is essentially a computer center where a company

You’ve probably heard the term data center, but may not completely understand what it means. Data centers are often referred to as computer centers, and are large facilities that are

Phoenix is a great place to build a data center due to ample cheap land, proximity to an urbanized area with a skilled workforce, available power grids, and existing telecommunications

The TIA-942 Data Center Standards Overview, published by the Telecommunications Industry Association, sets several basic standards for the overall design of data centers. The document outlines basic best practices for

Most businesses today rely on computers and modern technology in order to run efficiently and to meet the needs of customers whether local or worldwide. The data center plays an

Businesses today rely on computers and technology more than ever before. The earliest beginnings of email in the 60s marked a rapid change toward today’s almost complete reliance on computers.

Though many considerations go into the design and implementation of a mission critical facility, few are more important than the physical construction. Mission critical facility construction has to be reliable,

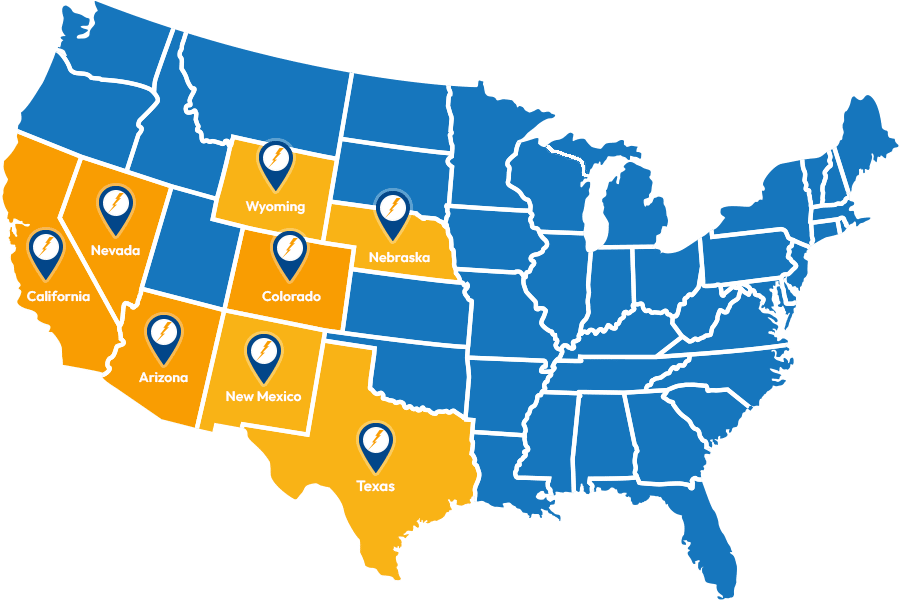

Service Locations

Expanded Service Area

Useful Links

Contact Information