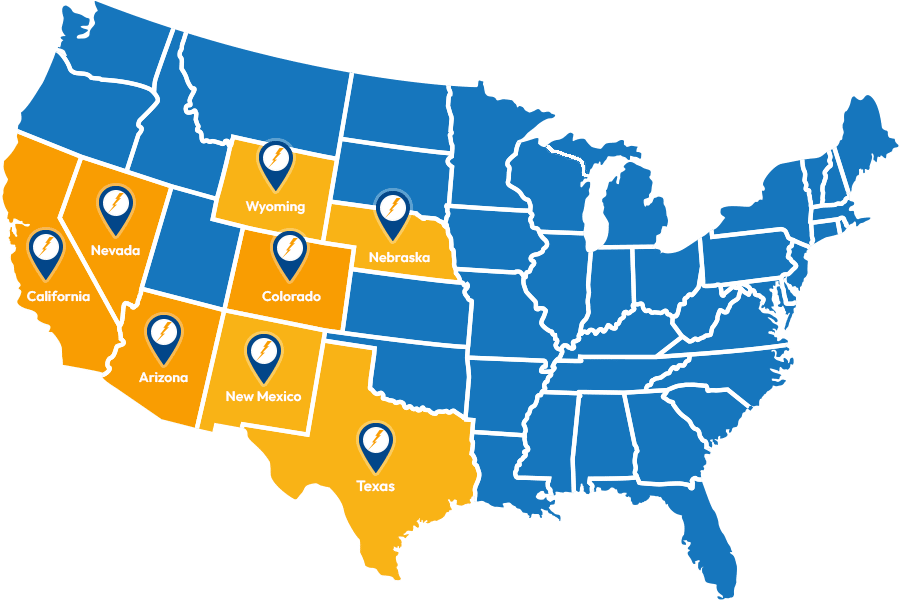

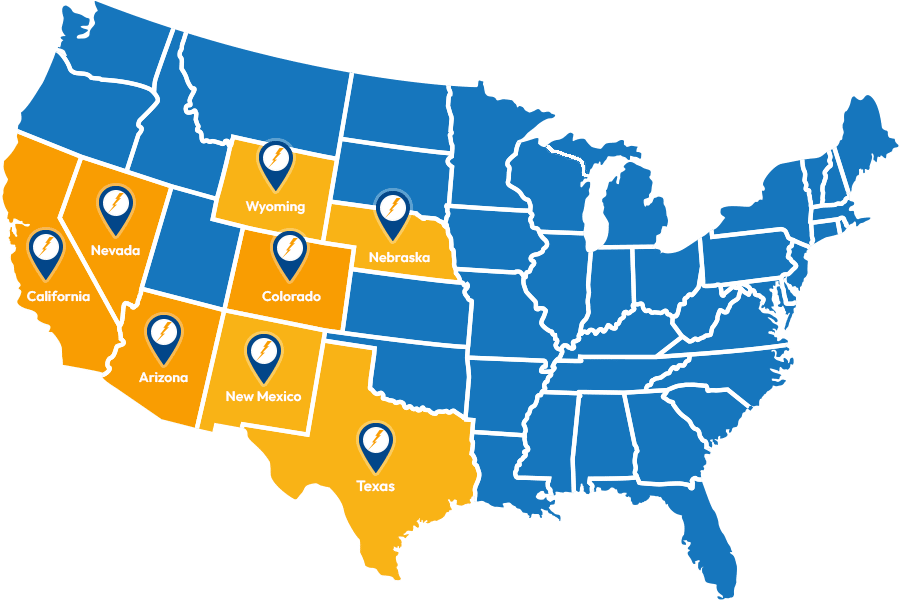

Service Locations

Expanded Service Area

We look forward to assisting you

Titan Power stands as a pillar of expertise and a renowned name in the mission-critical industry. As a licensed General Contractor, we specialize in managing complete turn-key projects, whether equipment replacements or new builds, encompassing planning, design, engineering, and construction.

Titan Power offers a range of flexible single and multi-year maintenance agreements for Uninterruptible Power Supply (UPS) and battery backup systems from various manufacturers, dedicated to maximizing your operational uptime. Our expertise lies in the meticulous maintenance of backup power equipment, a service trusted by hundreds of customers across the Southwest US.

with Titan Power’s customized maintenance agreements.

Titan Power is your trusted partner for emergency power and air solutions. As a leading distributor, we excel in providing a comprehensive array of products, equipment and software tailored to your specific needs.

find the right solution that aligns with your budget, requirements, and application.


Founded in 1986, Titan Power provides power and air solutions for data centers, computer rooms, and other mission-critical IT facilities. Headquartered in Chandler, Arizona, with a branch office in Denver, Colorado, and satellite locations in California and Nevada, we sell equipment nationwide and service customers in Arizona, California, Colorado, Nevada, and New Mexico.

Titan Power offers objective and unbiased recommendations. Unlike manufacturers, Titan Power sells, installs, and services all major brands. This important distinction not only allows us to be completely objective and unbiased in our equipment recommendations but also gives our customers a single-source solution for their power and air requirements.

To provide peace of mind through building and maintaining emergency power environments.

