Data Center UPS Total Cost of Ownership (TCO)

Every data center operates with a budget that plays a major role in determining specific data center infrastructure choices. Any time major decisions must be made, the budget will come

Implementing an Uninterruptible Power Supply system in any data center, of any size, faces challenges and potential pitfalls. While the end game of installing a UPS system in a data

Every business in the world can benefit from improving energy efficiency and data centers are certainly no exception. If anything, data centers are the prime example of the importance

Cloud computing has impacted, and will continue to impact, the technology world as we know it. The cloud is not only greatly impacting the way individuals utilize technology but

Maintenance is the key to extending the life of just about anything, and data center UPS batteries are no exception. When data center UPS batteries are neglected, what could be

When we discuss data centers, it seems that UPS (uninterruptible power supply) systems and generators go hand in hand. Redundant power with a reliable UPS battery or

In a world where technology moves at a lightning pace and everything is becoming more and more advanced, sometimes, it is best to get back to basics. Color coding could

Many businesses need data center space but do not have the resources or desire to run an entire facility themselves. These colocation customers rent space from data centers with managers

Any business or office must periodically assess itself from top to bottom in order to continue to succeed. This is done in different ways depending on the type of business,

Sometimes it may seem like all we talk about is backup power supply but there is a reason – when it comes to data centers, a reliable and effective backup

Technology is never static; it is in a constant state of evolution. Because of this, data centers must be vigilant

If you have been reading our blogs for a while you have probably heard us shout the importance of reliable backup power from the

If you have ever tried to turn on a flashlight only to realize the battery no longer

We have all been there – we need a wireless connection but just cannot seem to get a good signal. We scream at our phone and shake our fists

It may seem like, when it comes to data centers, all we talk about is energy efficiency. While this may be

Most UPS systems are the first line of defense against utility outages and load failure, so it’s important to understand how long the system will last and when to repair,

When it comes to data center design few things get more attention than power distribution. Power distribution units (PDUs) must be carefully selected for any data center based on power

When talking about data centers we tend to talk a lot about the importance of uptime and the measures to take to avoid dreaded downtime. This is for good reason.

Just like a homeowner is familiar with the steady increase in utility bills during the hot summer months, so are data center managers. Data centers use a lot of energy

What is in a name? Data center, computer room, server room? Most people probably think they are all basically the same thing. The names seem interchangeable, right? Wrong. When a

Change is inevitable. And when it comes to technology, if there is one thing you can count on it is transformation. Technology changes on what often seems like a daily

Tape drives for backup may have been a staple for decades but they are very quickly losing ground to disk storage. While tape has been around for so long, it

For any data center, maintaining uptime in a world fraught with potential hazards that could cause downtime is the highest priority. Today, most data centers try to take advantage of

The turn of the seasons brings about many changes. We adjust the thermostats in our homes and, similarly, we must also control the environment in a data center. But it is

It is the classic dilemma for data centers – move or renovate? When technology moves a mile a minute and everything from applications to infrastructure is constantly evolving, a data

Data center energy efficiency is at the forefront of hot topics for data centers. And, for good reason. Data centers use a truly astonishing amount of energy each year. The

Data center energy consumption is a major topic of conversation. Data centers are one of the largest consumers of energy in the country and in the world. Data center energy

Technology continues to deeply integrate itself into our everyday lives. Wearable technology is quickly becoming commonplace, something you see on everyone. Business Insider reports on just how rapidly wearable technology

Few things in recent history point to the need for a comprehensive disaster recovery plan for data centers more clearly than Hurricane Sandy. When disaster struck, many data centers were unprepared and

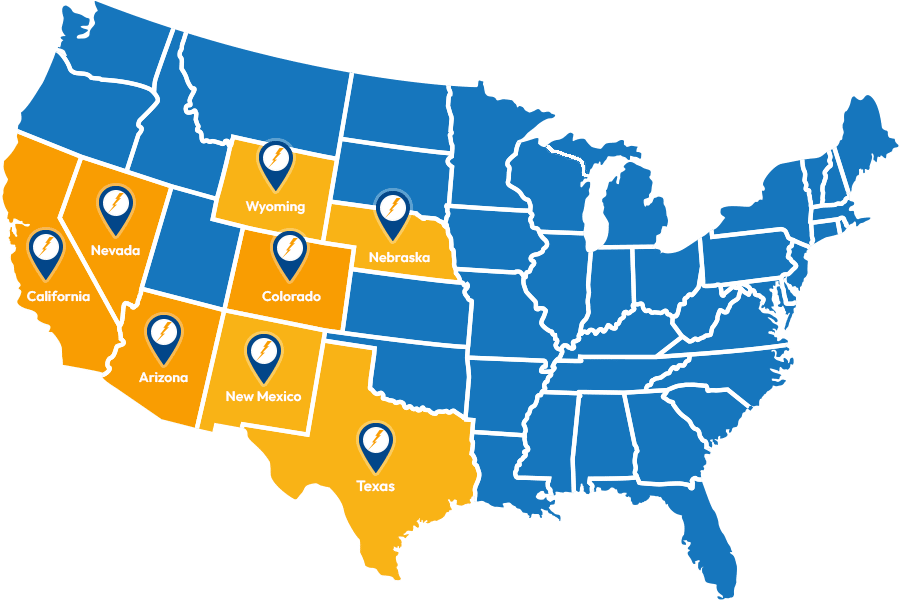

Service Locations

Expanded Service Area

Useful Links

Contact Information