Powering servers accounts for a substantial part of a data center’s costs. In many cases this is because servers run at peak performance around the clock in anticipation of peak demand. Much of that spending is unnecessary, since these systems do not always need to run at such high performance levels. It also inflates the minimum power required to maintain critical systems, which forces unnecessary expansion of power management systems and raises costs further.

Dynamic or Scheduled Performance

Protecting functionality while ensuring peak performance is a major challenge, but it should not be ignored: adjustable performance in these systems can yield substantial savings. Servers waste a tremendous amount of power by running at peak performance during periods of low demand, especially when the application suite is technically demanding and requires powerful hardware. Deactivating servers or resizing clusters can dramatically reduce power consumption, whether this follows a schedule of known usage or is driven by dynamic controls that detect shifts in usage and the need for more capacity. Reducing idle power consumption is a significant way to cut costs, and even the largest businesses can benefit from dynamic performance management. Done well, these methods reduce servers' power draw without degrading the user experience, even under unusually heavy traffic.
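To make the idea concrete, here is a minimal sketch of how a scheduled forecast and a live demand reading might be combined to decide how many servers stay powered on. Everything in it is an illustrative assumption rather than a reference to any particular product: the function names, the requests-per-second figures, the 20% headroom, and the hour-keyed schedule are all hypothetical.

```python
import math


def servers_needed(demand_rps, per_server_rps, headroom=0.2, min_servers=1):
    """Return how many servers should be active for a given demand.

    headroom is spare capacity kept on top of demand (0.2 = 20%),
    an assumed safety margin so that a sudden spike does not hurt users.
    """
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    required = demand_rps * (1 + headroom) / per_server_rps
    return max(min_servers, math.ceil(required))


def target_cluster_size(hour, observed_rps, schedule, default_rps,
                        per_server_rps, total_servers):
    """Combine scheduled and dynamic sizing.

    schedule maps an hour of day (0-23) to the demand expected from known
    usage; hours not listed fall back to default_rps. The larger of the
    scheduled and observed demand wins, so an unexpected surge still
    brings servers back online.
    """
    expected_rps = schedule.get(hour, default_rps)
    demand_rps = max(expected_rps, observed_rps)
    wanted = servers_needed(demand_rps, per_server_rps)
    # Never ask for more servers than physically exist.
    return min(total_servers, wanted)


# Hypothetical business-hours schedule (requests per second).
SCHEDULE = {9: 900, 10: 950, 11: 950, 14: 900}

night = target_cluster_size(3, 40, SCHEDULE, 100, 100, 20)
morning = target_cluster_size(9, 100, SCHEDULE, 100, 100, 20)
surge = target_cluster_size(3, 1450, SCHEDULE, 100, 100, 20)
print(night, morning, surge)
```

Taking the maximum of the scheduled and observed demand is a deliberately conservative choice: the schedule lets servers be powered down during known quiet hours, while the live reading protects users when traffic departs from the schedule.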

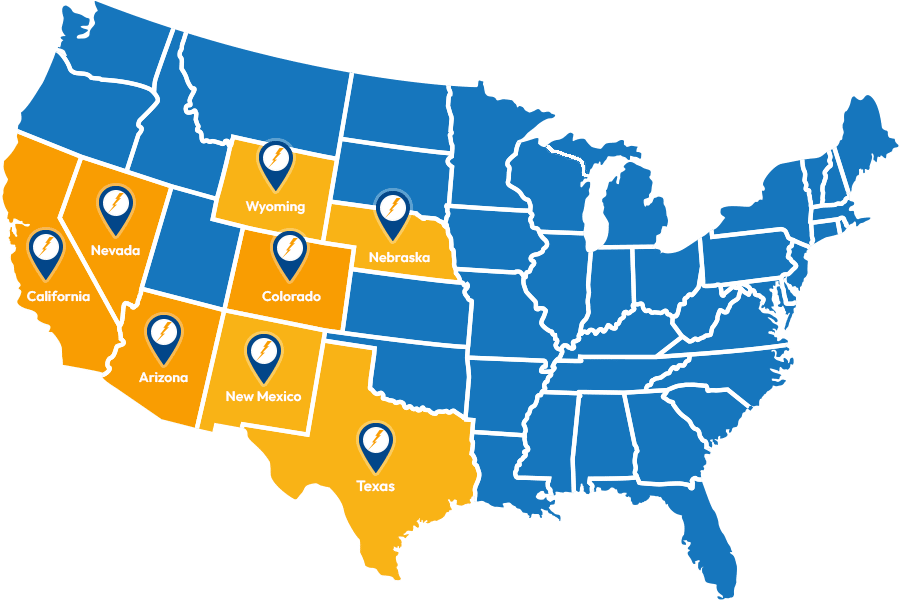

Load Balancing with Multiple Data Centers

As a business grows and its pool of users expands, it may become beneficial to have data centers in strategically chosen locations and to run applications only in regions that are currently in off-peak hours. Off-peak hours offer significantly lower power prices because demand is lower outside typical business hours. Latency concerns may make this impractical for some businesses, but for the majority of computational tasks a few hundred milliseconds of latency is not a concern. In some cases the ideal arrangement is to direct latency-sensitive traffic to a first tier of high-cost data centers and to send traffic without latency concerns to regions with cheap power. Integrating functionality across multiple data centers lets capacity and latency shift with user demand, saving capital in the process. Geographic distribution can also improve stability: the systems do not depend on a single power supplier, and because each site draws power independently, the platform as a whole is more reliable.
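The two-tier routing idea above can be sketched as a small selection function: among the data centers that meet a request's latency limit and still have capacity, pick the one with the cheapest power. This is only an illustration under stated assumptions. The `DataCenter` fields, the site names, and the prices and latencies are all hypothetical, and a real deployment would measure them rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenter:
    name: str
    latency_ms: float     # assumed round-trip latency to the user
    price_per_kwh: float  # assumed current electricity price
    free_capacity: int    # requests the site can still accept


def pick_data_center(centers, max_latency_ms):
    """Return the cheapest site that satisfies the latency limit.

    Returns None when no site with free capacity is fast enough, so the
    caller can decide whether to relax the limit or queue the request.
    """
    eligible = [
        c for c in centers
        if c.free_capacity > 0 and c.latency_ms <= max_latency_ms
    ]
    if not eligible:
        return None
    # Cheapest power first; break price ties by lower latency.
    return min(eligible, key=lambda c: (c.price_per_kwh, c.latency_ms))


# Hypothetical sites: a nearby one at peak prices, a distant one off-peak.
CENTERS = [
    DataCenter("local-peak", latency_ms=20, price_per_kwh=0.18, free_capacity=100),
    DataCenter("remote-offpeak", latency_ms=250, price_per_kwh=0.07, free_capacity=100),
]

interactive = pick_data_center(CENTERS, max_latency_ms=50)
batch = pick_data_center(CENTERS, max_latency_ms=500)
print(interactive.name, batch.name)
```

With these example numbers, the latency-sensitive request stays on the nearby high-cost site, while the latency-tolerant request is sent to the distant site where power is cheaper.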