Importance of Data Center Load Bank Testing

Do you know how long your data center could function in the event of a prolonged power outage? If you have never had comprehensive data center load bank testing completed,



Optimizing data center infrastructure and operations to maximize uptime and extend the lifespan of equipment is not simple and is definitely not something that is a one-time or even occasional

Designing and engineering a data center, computer room, or mission-critical facility is a complex process with multiple phases. It is a big undertaking and one that is best left to

Data centers of all sizes rely on a consistent power supply and therefore must employ some type of redundant power solution to ensure there is constant power for mission-critical

All data centers want to be more energy efficient but finding practical and economical ways to improve energy efficiency can be challenging. But, it is made even harder if a

After the last year, we know more than ever how important reliable power is in a healthcare setting. COVID-19 has put a strain on all healthcare settings like

A data center is not very useful if it cannot maintain uptime. Maximizing uptime is easier said than done. But, make no mistake, to stay competitive in today’s marketplace, it

PDU Maintenance & Repair

There are many important components of a successful data center. But, without power, none of them will matter much. Power distribution in a data center is

Can Your Data Center Infrastructure Keep Up with Modern Demand?

What is a data center if it is not agile, fast, and scalable? A dinosaur. Unfortunately, when the world of

Data centers power the world as we know it and that is why it is critical that we keep them safe. But, there are no two data centers that are

The majority of the world depends on computers for at least one aspect of their daily life. And, beyond the individual, businesses and governments depend on computers to remain operable.

2021 has been quite a year, hasn’t it? A year filled with unanticipated changes and challenges for every industry, including the data center industry. If you are like us, your

Edge computing has seen tremendous growth in the last few years and as we look into 2021 and beyond, it looks like it will only continue to grow. This year

A UPS (uninterruptible power supply) is not a new way to protect your data center against power failure. The UPS has stood the test of time, adapting and advancing to

Whether you are beginning to age out of your existing data center design and equipment or you are moving to a new location for other various strategic reasons, many data

Edge Computing Adoption is Rapidly Increasing

It’s the dawn of a new era for the world in many ways, and that includes edge computing. Edge computing is poised to grow

When the true impact of the COVID-19 pandemic set in, there was little anyone could do to prepare for how it would affect the world’s economy. Every single industry has

While data centers have fared relatively well during the COVID-19 pandemic, there is one category of the data center industry that has not only fared well, it has thrived –

No corner of the world has been untouched by the COVID-19 pandemic. Every industry has been impacted, including the data center industry. What were operational best practices 2 months ago

The world is facing an unprecedented pandemic that has led many governments to issue ‘stay at home’ and/or quarantine orders. While employees are allowed to go to work if their

The IoT Invasion of Data Centers

Though not new, the Internet of Things, or IoT, is one of those trendy tech buzzwords that people love to throw around but often

Evolve or Be Left Behind

Hardware is not only a major investment for data centers – it is a critical component of data center infrastructure and, as technology advances, the

Data Center Design & Layout Considerations

Few things are more critical in the functioning of almost any industry than data centers. While they are not necessarily the glamorous side of

A properly working UPS will minimize a company’s downtime and promote efficient, productive use of electronic equipment. When a UPS system displays a new status, sometimes it’s hard to discern

Is Your UPS System Running Efficiently and at Capacity?

UPS efficiency is one of the most critical components of effective data center operations. When a UPS system is not running

Data Center Specialized Testing

It is easy to get busy with the immense responsibilities and practicalities of running a data center but, do you really know how well things are

Any business that provides a service that is dependent on a continual power supply knows the importance of reliable and adequate backup power. There are businesses in virtually every industry

In the cloud era, we are seeing more and more people and businesses opt for edge computing. Edge computing is a buzzword in the tech industry but people sometimes struggle

Data Center Optimization

What data center doesn’t want to be more efficient, secure, and reliable? These three things are at the core of almost all decision-making for data centers. As

Are you properly evaluating the costs of UPS systems? When evaluating the purchase of a new UPS, it is important to remember that there are additional costs beyond just the

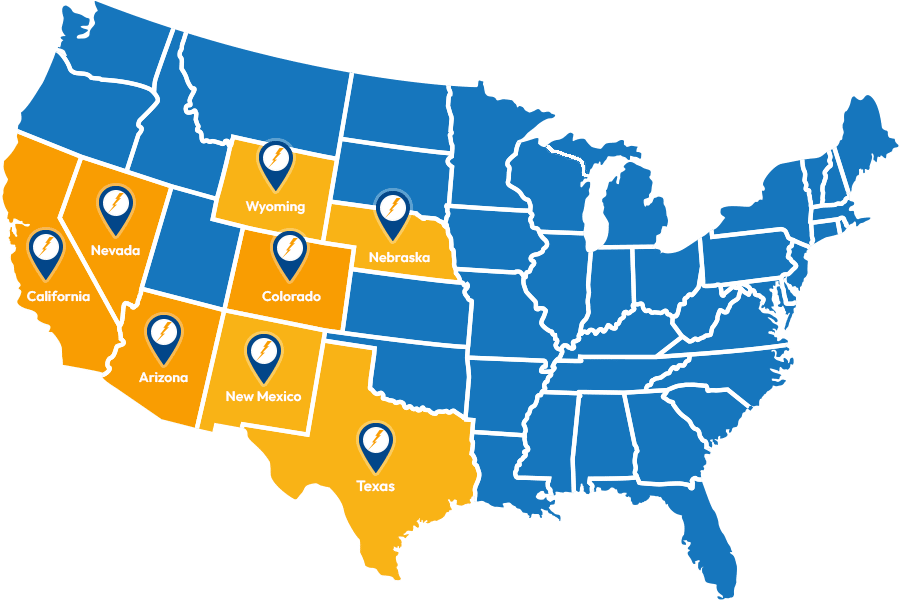
