2 Data Center Power Trends to Watch in 2019

2019 Data Center Power Trends We just had the summer solstice and now that we are more than halfway through the year we are seeing industry trends emerge in data

Bloom Energy Transforming Data Center Power Data center power has always been, and likely will always be, a hot topic. Data centers are some of the biggest power consumers in

Artificial Intelligence, also known as AI, is not a new concept. It has been the subject of many sci-fi novels and movies over the last 4 decades or so –

Beer and UPSs: More alike than different (no, really) Imagine our surprise when we discovered just how alike beer and UPSs are. From the need to be kept cool to

Blockchain and the Data Center Industry Blockchain is poised to revolutionize the technology industry as we know it, as well as many other industries that utilize technology in one form

Artificial Intelligence in the Data Center The modern data center that we know today, and certainly the one of tomorrow, will be heavily impacted by artificial intelligence, also known as

Data Center Network Speed Today, there is more data and larger data than at any time before in history – and it is only increasing. Larger size and volume of data means

Who knew there were so many things to know about batteries? Learn more about VRLA (Valve Regulated Lead Acid) batteries, which are typically seen in applications for UPS (Uninterruptible Power

Data Center Liquid Cooling – A Hot Trend There is no denying it, liquid cooling in data centers is hot right now. Traditionally, air has been used to cool

Technology is changing every second and what was on trend last year or the year before for data centers may be old news by now. With the end of 2018

The Healthcare Industry Demands Uptime If there has ever been a mission-critical facility that demands reliable power, it is the healthcare industry. Healthcare facilities need consistent, reliable power for a

As business demand for electricity continues to increase, electrical problems such as blackouts and brownouts will increase. These issues can be frustrating, cause loss of productivity and even require businesses

When hiring a service provider to maintain your critical power equipment, have you ever considered the actual technician that shows up to do the work? Technical knowledge is just one

Mission Critical Facility Power – Best Practices & Maintenance When we talk about businesses or operations that depend on reliable power, there are few operations that are completely dependent

Most UPS systems are the first line of defense against utility outages and load failure, so it’s important to understand how long the system will last and when to repair,

What is a Hyperscale Data Center? There is a lot of buzz about hyperscale data centers, and for good reason: the number of hyperscale data centers is growing rapidly and

How the Cloud is Being Used in the Data Center Today Today’s data centers are faced with managing more, and larger, data files than ever before. As the size of

Every data center is on a quest to maximize uptime and one of the most important components of data center uptime is data center batteries. There are a variety of

If there is one thing that all data centers must prioritize, constantly evaluate, frequently adjust, and dedicate a lot of resources to, it is cooling. Data center cooling is a

Preparing for the Worst – Data Center Cyber Attacks Preparing for the worst is something that is a priority, or at least should be, for every data center. But, for

The Need for an Analytic-Driven Data Center Everyone knows the importance of analytics in today’s modern, digital world. Without measurable data, it is difficult to make informed decisions about operations,

What is a UPS Battery Capacitor? One of the most critical power elements of any data center is its UPS (Uninterruptible Power Supply). There are many different types of UPS

Importance of Adequate Power Supply in Education Facilities The education sector constantly faces a wide array of risks that could impact their access to the power necessary to run their

A ‘mission critical’ operation, system or facility may sound fairly straightforward – something that is essential to the overall operations of a business or process within a business. Essentially, something

Every data center in the world utilizes Uninterruptible Power Supplies (UPSs) for reliable backup power in the event of an outage. Power outages can occur in a data center for

Data Center Backup Power Supply Batteries All data centers are run on power and (hopefully) the security and peace of mind of having a robust UPS and backup power supply

There are arguably few places in which reliable and sufficient backup power is more important than the healthcare industry. While backup power is very important for a myriad of industries,

A well-planned and well-managed data center anticipates potential hazards and works to minimize their risk. Additionally, by anticipating potential hazards, a data center can have appropriate response plans in case

One of the most important components of any data center is its Uninterruptible Power Supply (UPS). The UPS is tasked with maintaining uptime in a data center should there ever

Hyperconvergence is not just a buzzword; it is the future of data center operations. First there were converged data centers and now there are hyperconverged data center infrastructures that address

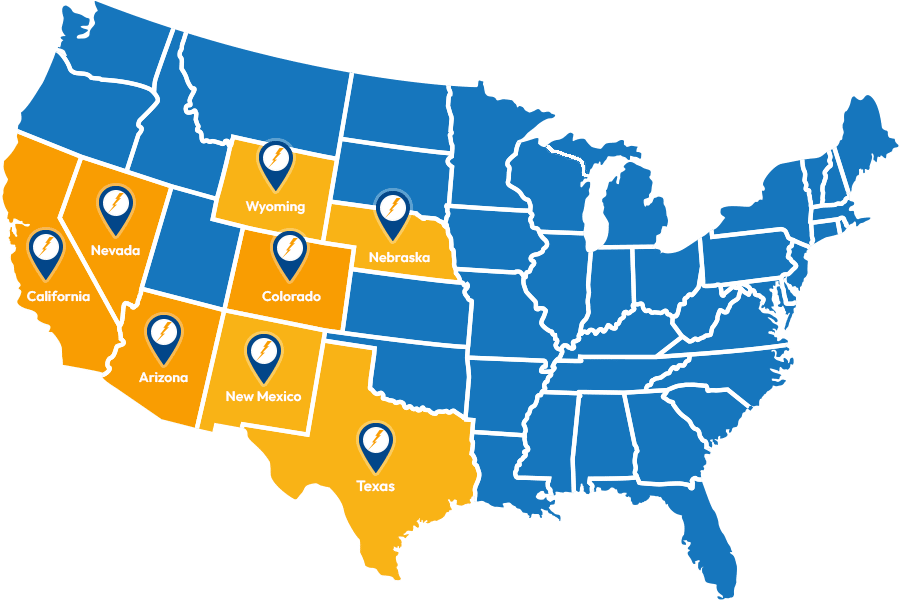
