Professional Data Center Design

The Necessity of a Professional Data Center Design Company: Quality data center design is essential to business function. You depend on your data center to keep your system running and

We look forward to assisting you

Businesses that are ready to undertake a data center construction project need to know how to structure the project so the design process results in the best structure for

When considering a data center cooling device, there are many types available to get the job done. In order to choose the one to best meet your needs, you should

It is always wise to hire a specialist, especially when it comes to technology. Data center construction companies oftentimes have a computer room. Hiring a person with a

When it comes to business, power outages are no small concern. They can result in lost data and lost income, sometimes to the extent of ruining a small business. Any

Data center construction is a huge undertaking. Many things can go awry along the way. To avoid unnecessary frustration in the form of wasted time and resources, it is beneficial

Data center maintenance is an important factor in maintaining your equipment. With proper maintenance, you can prolong its life and reduce overall costs by continuing to use your current equipment.

Data center maintenance includes monitoring batteries and testing them appropriately. You need to find out how much charge is left and how long it will last. When conducting battery testing,

When choosing data center equipment, the first step is to take the requirements of the data storage and transmission, and separate them into physical servers. These servers are the computers

Managers who have designed the power distribution system for their data center equipment based on Kohler backup generators have found that their data centers are virtually uninterruptible. The reliability that

Your organization depends on its data center to provide the electronic information required for daily operations. There isn’t a single day when the data center isn’t used. It is accessed

One interesting fact about casino data center design is that it is run almost the same way as a comparably sized bank, even though it appears very different to the

Business never stops, so neither should your organization! There are people hard at work twenty-four hours a day, seven days a week. Like many others, your organization is connected to

Every business owner is interested in the long-term growth and maturation of their business. It’s not enough to simply start a successful business; the real goal that every business

In many ways, the heart of any organization is its data center. This nexus of powerful computer hardware guides all the network functions of an organization, collects and stores a

Operating data centers is a booming market. More and more companies are moving to IP (Internet Protocol — online) networks for their company intranets. A growing number of companies hope

Managing a data center is a big job. Additionally, it is without question one of the most important elements of your high-tech organization. A data center contains all the information

When the data center your organization relies on has an emergency, you can’t afford any delays. A call has to be immediately placed to your data center maintenance firm for

A data center is a large, powerful system of computer components designed to provide the very highest level of network performance to an organization. Daily workflow, shifting resource demands, information

A data center operation is a lean operation that is designed to use both computer storage and input power as efficiently as possible. Because the technology is straightforward, and many

Titan Power was proud to be the “Golf Ball Sponsor” for this year’s 3rd Annual Arizona Technology Council Golf Tournament. As the “Golf Ball Sponsor,” our logo was printed on

Titan Power completed over 53 construction projects. Project scopes ranged from electrical cabling to a UPS installation to the complete design & build of a 2,000-square-foot computer room.

Come visit Titan Power’s booth at the IFMA (International Facility Management Association) Facilities Expo: When: Wednesday, October 26th & Thursday, October 27th Where: Phoenix Convention Center The World Workplace Expo

Titan Power, Inc. currently holds a State of Arizona, Department of Administration, State Contract # EPS070086-A5 for Uninterruptible Power Supply (UPS) equipment and services. This “State Contract” allows Arizona governmental

Our Field Service Engineers try to get these “Call for Service” stickers on all of the Uninterruptible Power Supply (UPS) and Power Conditioning units we service so it is easier

Titan Power provided ASU with an 800kW rental generator for back-up power during their 6-week, Fall 2011 registration period. The generator is located alongside Palm Walk, which is a main

Titan Power is excited to announce the addition of two new Sales/Account Representatives to better serve our expanding customer base. Gene Reeck and Jack Gieseking have extensive experience in Account

Data Center & Computer Room Construction: Data Centers, Computer Rooms and Mission Critical Facilities are very specialized and selecting the right contractor is one of the most important decisions you

Did you know Titan Power provides comprehensive and cost-effective standby generator services? We provide maintenance and repair services on most major brands including Caterpillar, Cummins, Katolight, Kohler and

In celebration of our 25th Anniversary, Titan Power is pleased to launch our new website! The new website has the same address www.titanpower.com but an updated look and feel. It is

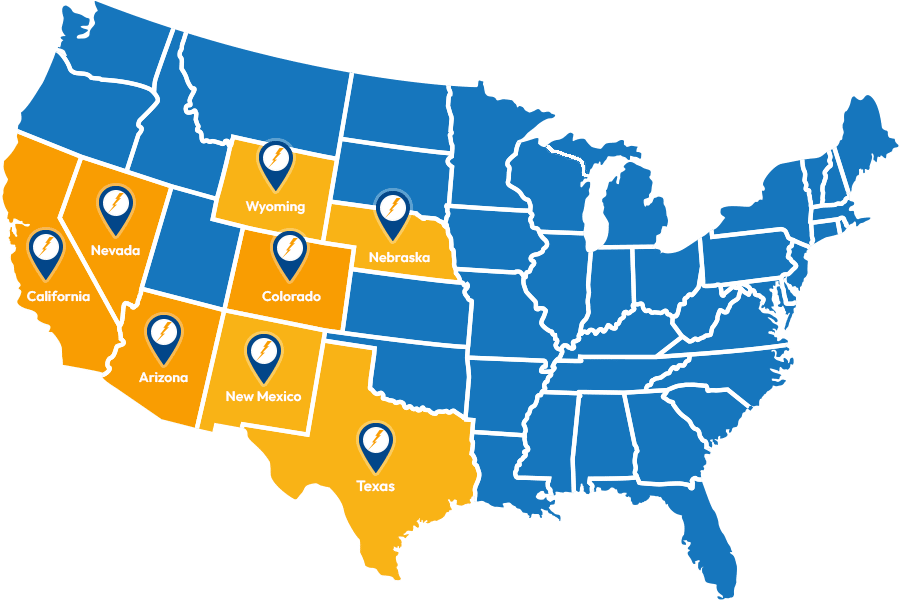
