Titan Power, Inc. Celebrates 25th Anniversary with New Website Launch

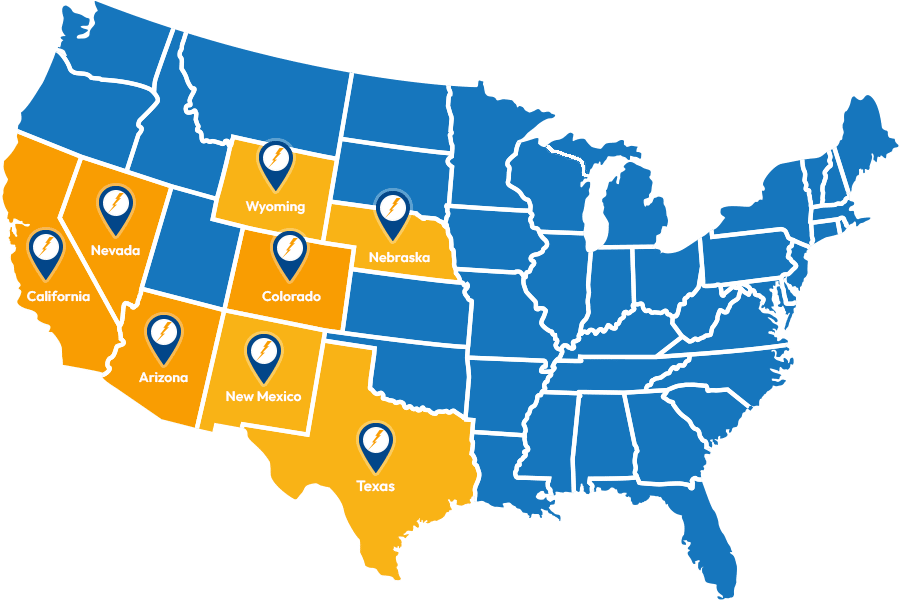

Titan Power of Phoenix, AZ, extends its responsiveness with the launch of a new website. Features include overviews of data center construction, product information including sales and service of UPS,